| CS-534: Packet Switch Architecture

Spring 2004 |

Department of Computer Science

© copyright: University of Crete, Greece |

1.3 Multiplexing, Demultiplexing, Statistical Multiplexing, Output Contention


1.3.1 Multiplexing and Demultiplexing: Shared Link Communication

The simplest form of networking is like the simplest form of programming: sequential --rather than parallel. In this simplest form of networking, all information, from all sources, is placed on one shared link; each destination then selects the information it desires from this shared link. As we first saw in section 1.1.3, the component that aggregates information from multiple sources to one place is called a multiplexor, and the component that distributes information from one place to multiple destinations is called a demultiplexor; we repeat here the first figure from that section.

Shared link networking is simple because there is no "space diversity" --everything goes through one, common place-- and because all activity is sequential: only one thing happens at a time on the single, common link; in other words, there is no parallelism. We also refer to shared link networking as time switching because, given the lack of space diversity, one piece of information is differentiated from another only by the time at which each of them appears on the shared link; this is further discussed in §2.1.
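As an illustration of time switching, the following is a minimal sketch, not taken from the course material. It assumes fixed round-robin time slots, so that slot t on the shared link belongs to source (and destination) t mod N. The names `multiplex`, `demultiplex`, and the `IDLE` marker are hypothetical.

```python
from itertools import zip_longest

IDLE = None  # hypothetical marker for a time slot that carries no word


def multiplex(sources):
    """Place words from all sources on one shared link, one word per time slot.

    Assumption: fixed round-robin slots, so slot t carries a word from
    source t % len(sources), or IDLE if that source has nothing left.
    """
    link = []
    for frame in zip_longest(*sources, fillvalue=IDLE):
        link.extend(frame)
    return link


def demultiplex(link, n_destinations):
    """Destination i keeps only the words seen in slots where t % n == i."""
    outputs = [[] for _ in range(n_destinations)]
    for t, word in enumerate(link):
        if word is not IDLE:
            outputs[t % n_destinations].append(word)
    return outputs


sources = [["a0", "a1"], ["b0", "b1"], ["c0"]]
link = multiplex(sources)
print(link)                  # ['a0', 'b0', 'c0', 'a1', 'b1', None]
print(demultiplex(link, 3))  # [['a0', 'a1'], ['b0', 'b1'], ['c0']]
```

Nothing in a word says where it came from or where it is going; only its position in time on the link does, which is the sense in which this is time switching.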

Shared link networking is obviously non-scalable: the shared link must have sufficient throughput to carry the aggregate traffic from all sources. As the number of sources grows, and as the throughput of each source grows, their aggregate throughput soon exceeds the capacity of any practically implementable link. Yet, the basic ideas of shared-link networking can be found --as building block components-- in all other forms of higher-performance, multi-link networks.
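The scalability argument is simple arithmetic, which the sketch below spells out. All numbers (1 Gb/s per source, a 40 Gb/s link) are hypothetical values chosen only for illustration, and the function names are not from the course material.

```python
def aggregate_throughput(source_rates_gbps):
    """Total traffic that the shared link would have to carry."""
    return sum(source_rates_gbps)


def link_suffices(source_rates_gbps, link_capacity_gbps):
    """True if one shared link can carry all sources at their full rate."""
    return aggregate_throughput(source_rates_gbps) <= link_capacity_gbps


LINK_CAPACITY_GBPS = 40.0  # assumed capacity of the fastest link we can build

for n_sources in (4, 16, 64):
    rates = [1.0] * n_sources  # assumed: every source offers 1 Gb/s
    print(n_sources, aggregate_throughput(rates),
          link_suffices(rates, LINK_CAPACITY_GBPS))
```

With these assumed figures the shared link is sufficient for 4 and 16 sources but not for 64; raising the per-source rate moves the breaking point to an even smaller number of sources.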


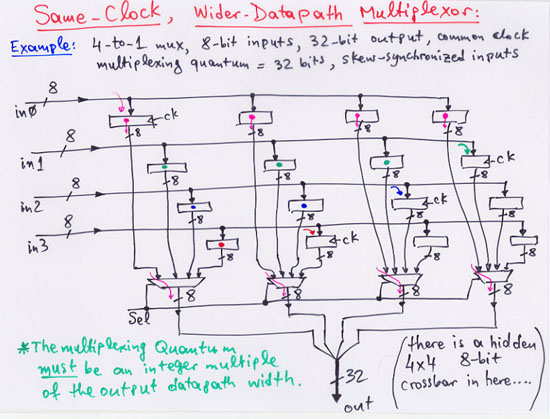

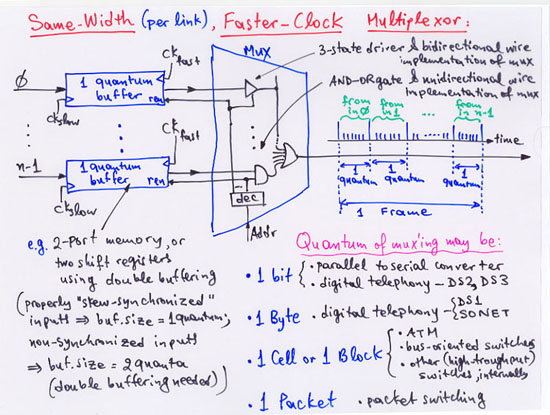

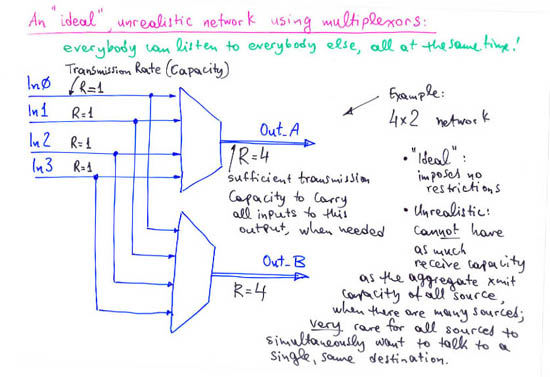

Implementation Issues: Multiplexors can be implemented using unidirectional wires (gathering all input links to one physical location, then using AND-OR digital gates), or they may be implemented in a physically distributed way, using bidirectional wires driven from multiple physical locations via tri-state driver digital gates (bus architecture). Unidirectional wires are preferable in high-throughput systems, because multi-driver buses suffer from considerable overheads, as discussed in the next section (1.4). The output link of a multiplexor must run faster (have a higher transmission rate) than the individual input links. This can be achieved using a faster clock (first transparency below) or, if the clock frequency limit has already been reached, using a wider link (second transparency below); the wide-link system has a hidden "space switching" flavor in it.
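The two implementation points above can be sketched in software, under stated assumptions. `and_or_mux` models the AND-OR gate structure on integer words with a decoded (one-hot) select. `output_link_options` assumes that the output link must carry the full aggregate rate of N identical input links, and shows the faster-clock and wider-link alternatives. Both function names and the example figures are hypothetical.

```python
def and_or_mux(inputs, select, width=8):
    """AND each input word with its enable line, then OR all the results.

    `select` is the index of the input to pass through; exactly one
    enable line is active, so the OR returns that input unchanged.
    """
    mask = (1 << width) - 1
    out = 0
    for i, word in enumerate(inputs):
        enable = mask if i == select else 0  # enable bit replicated over the word
        out |= word & enable                 # AND stage, then OR stage
    return out


def output_link_options(n_inputs, in_clock_mhz, in_width_bits):
    """Two ways to reach the aggregate rate of n_inputs identical input links.

    Assumption: the output must carry all inputs at their full rate.
    Returns (required rate in Mb/s, faster-clock option, wider-link option).
    """
    required_mbps = n_inputs * in_clock_mhz * in_width_bits
    faster_clock = {"clock_mhz": n_inputs * in_clock_mhz, "width_bits": in_width_bits}
    wider_link = {"clock_mhz": in_clock_mhz, "width_bits": n_inputs * in_width_bits}
    return required_mbps, faster_clock, wider_link


print(hex(and_or_mux([0x11, 0x22, 0x33], select=1)))  # 0x22
print(output_link_options(4, 100, 8))
```

For four 8-bit inputs clocked at 100 MHz, both options deliver 3200 Mb/s: either an 8-bit link at 400 MHz or a 32-bit link at 100 MHz.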

Demultiplexors are sets of circuits that all monitor a shared link; each selects an appropriate subset of the information on that link, buffers it in a memory element, and transmits it (normally at a lower rate) on one of a set of outgoing links. Examples of demultiplexing circuits were seen in section 1.1.5; by comparing them to the multiplexing circuits of §1.1.4, the resemblance of the two becomes apparent. Based on this similarity --or reversal or mirror relation-- the topics on multiplexors discussed in the previous subsection can be applied to demultiplexors.
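The select, buffer, and retransmit behavior described above can be modeled as follows. This is a minimal sketch, not one of the circuits of section 1.1.5. It assumes that selection is by time slot (port i owns the cycles where cycle mod N equals i) and that each outgoing link sends one word every N cycles of the shared link. The class `DemuxPort` and the function `run` are hypothetical names.

```python
from collections import deque


class DemuxPort:
    """One demultiplexing circuit watching the shared link."""

    def __init__(self, port_id, n_ports):
        self.port_id = port_id
        self.n_ports = n_ports
        self.buffer = deque()  # memory element holding selected words
        self.sent = []         # words transmitted on the outgoing link

    def tick(self, cycle, link_word):
        # Select: latch the word only in this port's own time slot.
        if link_word is not None and cycle % self.n_ports == self.port_id:
            self.buffer.append(link_word)
        # Transmit at the lower rate: one word every n_ports link cycles.
        if cycle % self.n_ports == 0 and self.buffer:
            self.sent.append(self.buffer.popleft())


def run(link_words, n_ports):
    """Feed the shared link to every port; return what each port sent."""
    ports = [DemuxPort(i, n_ports) for i in range(n_ports)]
    # Pad with idle cycles so that buffered words can drain.
    for cycle, word in enumerate(list(link_words) + [None] * n_ports):
        for port in ports:  # all ports monitor the same link
            port.tick(cycle, word)
    return [port.sent for port in ports]


print(run(["a0", "b0", "a1", "b1"], 2))  # [['a0', 'a1'], ['b0', 'b1']]
```

Because each port receives at most one word per N link cycles and also sends one word per N cycles, its buffer never holds more than one word in this model.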

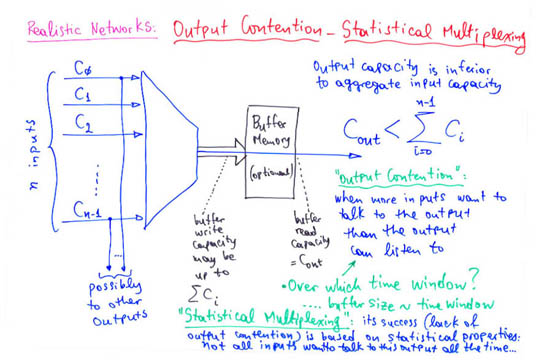

1.3.2 Statistical Multiplexing, Output Contention


Last updated: 3 Apr. 2004, by M. Katevenis. |